One of the most prominent issue when it comes to working with Tableau is speed issues. Mostly present in production environments within larger companies. Some of the time, the slowness is due to the very large files that are imported into Tableau. Im talking about dealing with millions and billions of records in this case. There is no perfect solution for everyone who has this problem since a lot of the factors vary from case to case. Hardware, internet speed, the shape and type of data that you work with and many more reasons. However, in this blog, I will be going though some points in which everyone with this issue can consider and apply if applicable. Lets get on to the first point of consideration:

#1 – Use Minimal Custom SQL if needed

Using custom SQL in Tableau is a way of connecting to specific queries rather than connecting to the entire dataset. This is only applicable for certain databases also and the syntax also does change from database to database. Using custom SQL can be quite powerful while using Tableau. It is potentially easier for certain users who are proficient in SQL to use Tableau for connecting to the desired data. However it does have its limitations.

In some cases, analysts rely completely on custom SQL queries and bypass using Tableau’s own data engine. It is best practice to use custom SQL in conjunction with Tableau’s data engine while keeping the custom SQL as simple as possible. If using custom SQL is necessary. Only relying on custom SQL can result in long and confusing queries during your analysis. This is because with each query Tableau creates, it wraps it around the Custom SQL query that is written. Therefore, with longer custom SQL queries where you specify grouping, ordering, joining etc, the generated queries will only make it more confusing and longer. Resulting in slower performance. Which is not something you would want while working with large files.

Recommendations

Below are some recommendations on what you can do to optimise your custom SQL query:

- Use initial SQL: This technique will eliminate your custom SQL query being run multiple times. The initial SQL query is only run when the workbook is first opened. It can also be used to create a temporary table. WIth larger databases, this can help boost performance. One drawback of this method however is that the temporary table will remain the same during the duration of the session. SO updates to the underlying data will not reflected until a new session is opened.

- Use very simple custom SQL and leverage Tableau’s data engine: Make your custom SQL query as simple as possible if you have to use custom SQL. Examples include not including every column with (SELECT *). Select only the desired columns and let Tableau’s data engine take care of relationships. With mixing both, you make querying more dynamic within Tableau.

#2 – Extracts

Number two on the list for working with large files with Tableau is the use of data extracts. This tip is one of the most recommended tips given to customers that complain about slow dashboard performance. Using extracts rather than establishing a live connection to data can save you a lot of trouble. In short, a data extract is a subset copy or a full copy of the underlying data that is stored locally. Rather than having a live connection to the data. In analogical terms, it is like downloading a TV show on your phone from Netflix versus streaming one on demand. To freshen up your knowledge on data extracts, visit this link for more information.

While working with large files in your analysis, chances are that you do not need every column and row in the dataset. With a live connection, you are already connecting to the whole table from the start. Even if you filter out fields afterwards. You should get rid of what you do not need first, that is where extracts can come in handy. I am not going to go over how to create an extract in this post. You can find out how in the link above. However, I will go though what you should watch out for when creating an extract in Tableau Desktop below:

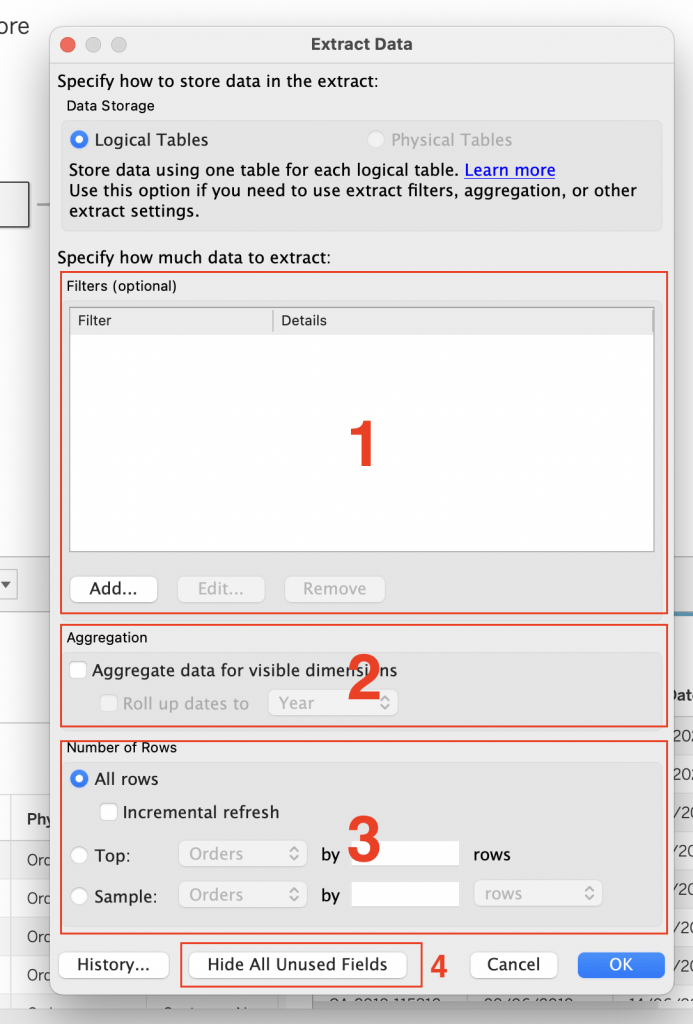

Data Extract Window

- Filters: This is the section where you specify the filtering of your extract. Choose which columns to include, specify conditions etc to limit the size of your extract.

- Aggregation: Through aggregating for visible dimensions, you essentially limit the row-by-row nature of the dataset and have an aggregated value for each dimension. The default aggregation is SUM. This can minimise the size of your extract and increase performance. You can also roll up the dates to the specified date part for more aggregation such as make all dates years.

- Number of Rows: In this section, you can specify the number of rows to extract either with a top filter or sample from a specific dimension. In addition, you can specify an incremental refresh which adds rows that are new since the last time the data is refreshed.

- Hide all Unused Fields: As the title says, this button hides all unused fields in the extract so that maximum speed is reached. Including unused fields in the extract is just another unnecessary load on processing times.

In most cases, large files result in slower processing times in Tableau. Therefore reducing the size of the extract by filtering out the unnecessary data will result in faster dashboards.

#3 – Transforming Data to its Optimum Level

This third tip might not be the best tip if we are only looking at fixes to do in Tableau Desktop, however with additional ETL (extract-transform-load) tools, our data is able to reach its optimum shape that is ready for analysis. Sometimes the way that the database tables are set up are not optimum for doing data analysis immediately. Sometimes they require more advanced preparations techniques that Tableau Desktop offers. Applications such as Tableau Prep or Alteryx are built for turning messy, unoptimised tables into analysis ready tables. For example, certain data types are faster to process than others like booleans to integers. If you are checking if a condition is true and it is very important for your analysis, create a new column and add the true false cause before importing the data into Tableau Desktop. Examples like these help working with large files more efficient.

Final Remarks

I have listed my personal list of the most important points to consider while dealing with large files in Tableau Desktop. In addition to this list, the is quite a lot more things that can be done to improve performance since there are quite a lot of factors weighing into performance problems. Sometimes the performance issues can come from having too many marks and visualisations on a dashboard where the rendering times take a while. Sometimes it can be that the underlying database is overloaded and processing times take a while, even when you have a dataset that is relatively small. Or sometimes it is due to the size of the database that you are doing analytics on. If you would like to know more about performance increase in Tableau in general, click this link.

Check out our blogs page to view more blogs on Tableau, Alteryx and Snowflake here.

Work together with one of our consultants and maximise the effects of your data.

Contact us, and we’ll help you right away.